My Approach to Agentic AI

As part of my efforts at work to get into Gen AI, I created an AWS Lambda that uses the Node.js SDK to interact with Amazon Bedrock. The Lambda provides two endpoints: one that uses the ConverseCommand and another that uses the RetrieveAndGenerateCommand. The latter uses an Amazon Bedrock Knowledge Base for retrieval-augmented generation (RAG) to retrieve answers to questions. After spending some time refining things like prompts to generate the desired responses, I was directed to follow an Agentic approach instead.
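For readers who haven't used these two commands, here is a minimal sketch of the request objects each endpoint might build. It assumes the Lambda passes these plain objects to `new ConverseCommand(...)` from `@aws-sdk/client-bedrock-runtime` and `new RetrieveAndGenerateCommand(...)` from `@aws-sdk/client-bedrock-agent-runtime`. The function names, IDs, and tuning values are placeholders, not the code from my Lambda.

```typescript
// Minimal shapes of the two command inputs (only the fields used here).
interface ConverseInput {
  modelId: string;
  messages: { role: "user" | "assistant"; content: { text: string }[] }[];
  inferenceConfig?: { maxTokens?: number; temperature?: number };
}

interface RetrieveAndGenerateInput {
  input: { text: string };
  retrieveAndGenerateConfiguration: {
    type: "KNOWLEDGE_BASE";
    knowledgeBaseConfiguration: { knowledgeBaseId: string; modelArn: string };
  };
  sessionId?: string;
}

// Endpoint 1: send the user's question straight to a model.
function buildConverseInput(modelId: string, question: string): ConverseInput {
  return {
    modelId,
    messages: [{ role: "user", content: [{ text: question }] }],
    inferenceConfig: { maxTokens: 1024, temperature: 0.2 }, // hypothetical values
  };
}

// Endpoint 2: answer from a Knowledge Base (RAG).
function buildRagInput(
  knowledgeBaseId: string,
  modelArn: string,
  question: string,
  sessionId?: string,
): RetrieveAndGenerateInput {
  return {
    input: { text: question },
    retrieveAndGenerateConfiguration: {
      type: "KNOWLEDGE_BASE",
      knowledgeBaseConfiguration: { knowledgeBaseId, modelArn },
    },
    // Pass the sessionId returned by a previous call to continue that conversation.
    ...(sessionId ? { sessionId } : {}),
  };
}
```

Keeping the builders as plain functions makes them easy to unit test without calling Bedrock.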

As part of my agentic approach, I have created a Lambda that provides an interface for interacting with Amazon Bedrock Agents. This Lambda takes in an Agent's details and calls the desired Agent. I knew that other engineers would soon be working with Bedrock Agents, so I wanted to provide a simple service they could call to get the responses they need. The Lambda handles all the credentials necessary to invoke the Agent, then processes the Agent's response and returns it to the client as a string.
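Here is a minimal sketch of the two parts of such a Lambda that do the most work: validating the Agent details sent by the client and turning the Agent's streamed reply into a single string. The request body shape and the `parseAgentRequest` and `collectCompletion` names are hypothetical. The field names match the `InvokeAgentCommand` input, and `CompletionEvent` mirrors the events in `response.completion` from `@aws-sdk/client-bedrock-agent-runtime`.

```typescript
// Fields the InvokeAgentCommand needs to reach a specific Agent.
interface InvokeAgentInput {
  agentId: string;
  agentAliasId: string;
  sessionId: string;
  inputText: string;
}

// Mirrors one event in `response.completion` (only the text chunk part).
interface CompletionEvent {
  chunk?: { bytes?: Uint8Array };
}

// Hypothetical: the client posts the Agent details as a JSON body.
function parseAgentRequest(body: string | null): InvokeAgentInput {
  const parsed = (JSON.parse(body ?? "{}") ?? {}) as Partial<InvokeAgentInput>;
  const required: (keyof InvokeAgentInput)[] = ["agentId", "agentAliasId", "sessionId", "inputText"];
  for (const key of required) {
    if (typeof parsed[key] !== "string" || parsed[key] === "") {
      throw new Error(`Missing or invalid field: ${key}`);
    }
  }
  // Copy only the known fields so extra client input is not forwarded.
  const { agentId, agentAliasId, sessionId, inputText } = parsed;
  return { agentId, agentAliasId, sessionId, inputText } as InvokeAgentInput;
}

// The Agent streams its answer in chunks; join them into one string.
async function collectCompletion(stream: AsyncIterable<CompletionEvent>): Promise<string> {
  const decoder = new TextDecoder("utf-8");
  let text = "";
  for await (const event of stream) {
    if (event.chunk?.bytes) {
      // stream: true keeps multi-byte characters intact across chunk boundaries.
      text += decoder.decode(event.chunk.bytes, { stream: true });
    }
  }
  return text + decoder.decode(); // flush any bytes still buffered
}
```

In the handler, the result of `parseAgentRequest` would go into `new InvokeAgentCommand(...)`, and, after checking that `response.completion` is defined, `collectCompletion(response.completion)` would produce the string returned to the client.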

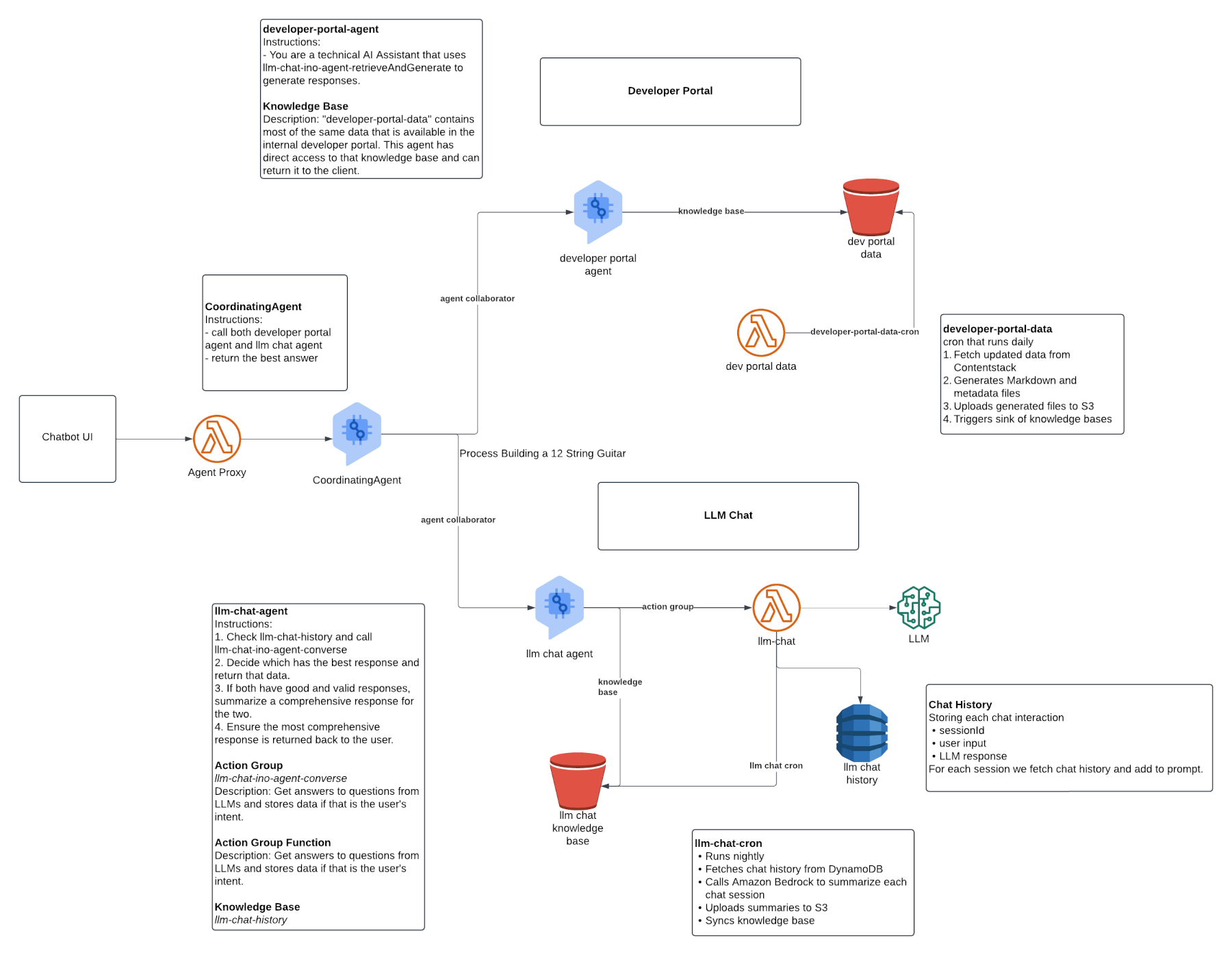

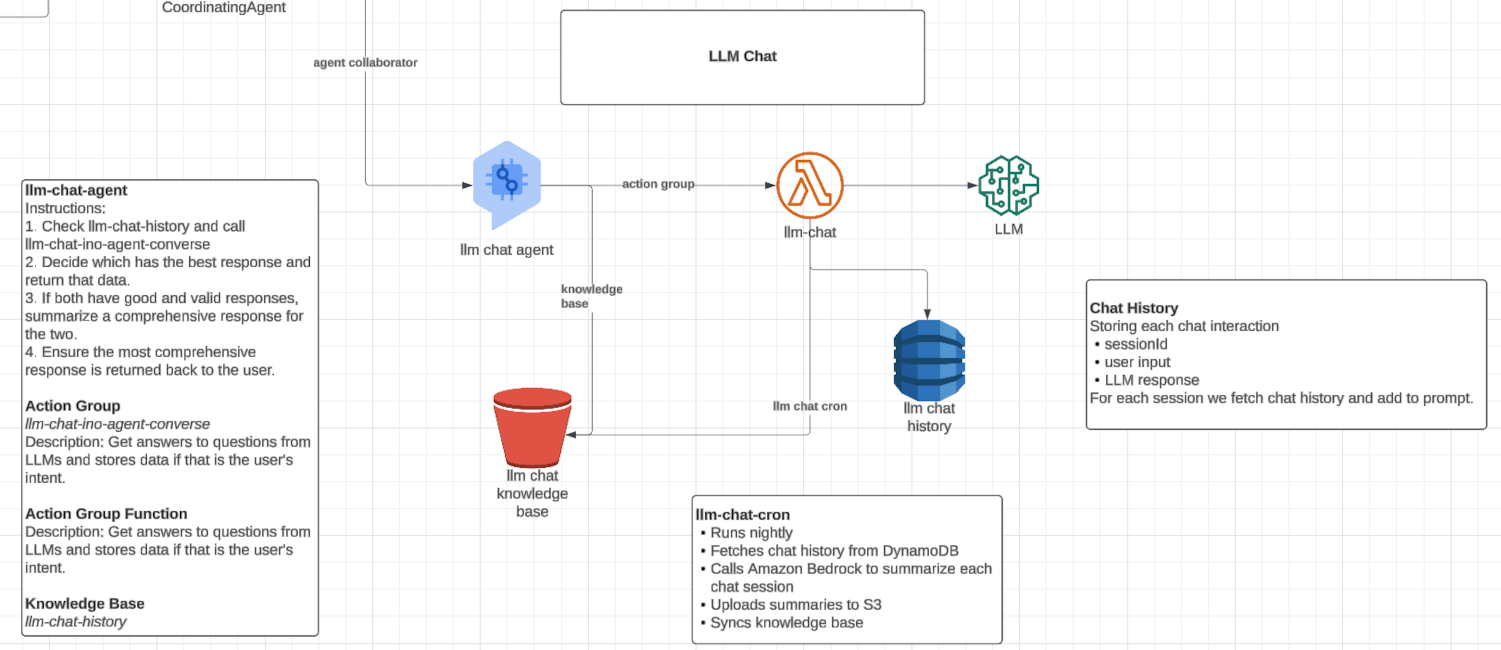

The following represents the architecture I have built out as of today.

I have a NextJS chatbot front-end application that calls the agent-proxy to reach the CoordinatingAgent. The CoordinatingAgent has two agent collaborators: one for internal developer portal data and one for retrieving general-purpose answers and tracking chat history.

Let's walk through this.

Internal Developer Portal Data

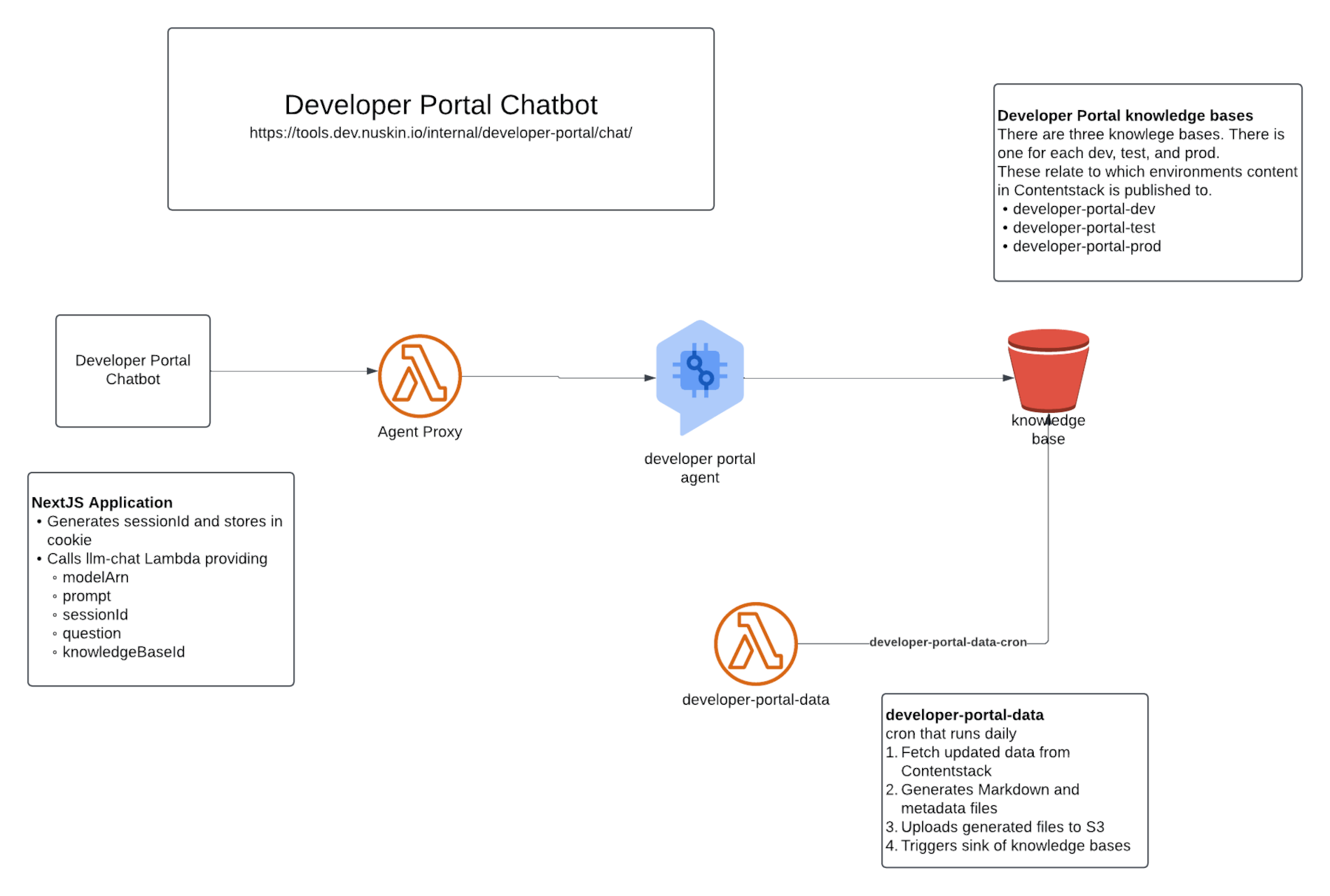

I have outlined the Internal Developer Portal in the Portfolio portion of my site. The dev portal has it's own chatbot

where users can ask questions about our company's APIs, Applications, SDKs, IaCs, Vendors, and our standards required for developing projects

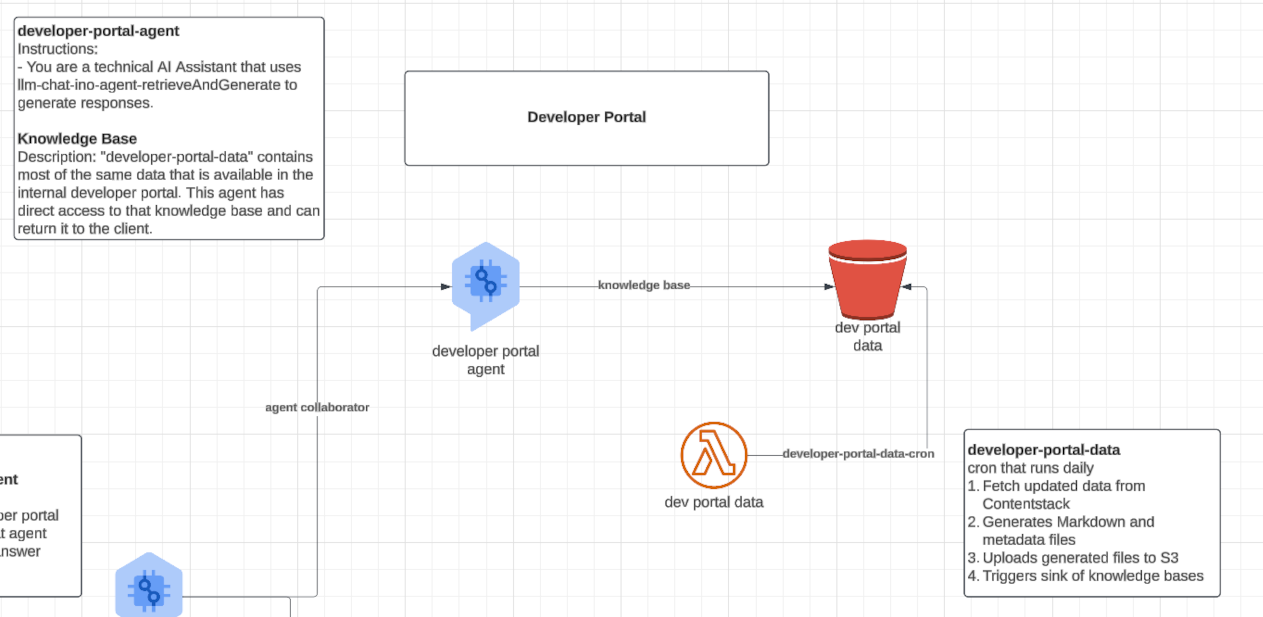

in the way we prescribe. This chatbot calls the Lambda I mentioned above. I have also created a Lambda function that acts as a cron running daily.

This cron gathers all the latest data it uses to build out the dev portal. It processes the data, generating Markdown files and metadata.json files.

Once these are generated, the files are uploaded to S3. The Bedrock Knowledge Base for the dev portal data points at that S3 bucket. Once the S3

bucket is updated the cron triggers the knowledge base to sync using the StartIngestionJobCommand from the AWS SDK.
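To show what the cron's output might look like, here is a minimal sketch that turns one catalog entry into a Markdown document plus its metadata file. Bedrock Knowledge Bases pair a source file such as `payments-api.md` with a sidecar named `payments-api.md.metadata.json` containing a `metadataAttributes` object, which can be used for filtering at retrieval time. The `PortalItem` shape, the attribute names, and the `dev-portal/` key prefix are hypothetical.

```typescript
// Hypothetical shape of one entry gathered by the daily cron.
interface PortalItem {
  slug: string;
  name: string;
  kind: "API" | "Application" | "SDK" | "IaC" | "Vendor" | "Standard";
  description: string;
  owner: string;
}

interface KnowledgeBaseFile {
  key: string; // S3 object key
  body: string; // file contents
}

// Builds the Markdown document and its `<name>.md.metadata.json` sidecar.
function toKnowledgeBaseFiles(item: PortalItem, prefix = "dev-portal/"): KnowledgeBaseFile[] {
  const docKey = `${prefix}${item.slug}.md`;
  const markdown = [
    `# ${item.name}`,
    "",
    `**Type:** ${item.kind}`,
    `**Owner:** ${item.owner}`,
    "",
    item.description,
    "",
  ].join("\n");
  const metadata = { metadataAttributes: { kind: item.kind, owner: item.owner } };
  return [
    { key: docKey, body: markdown },
    { key: `${docKey}.metadata.json`, body: JSON.stringify(metadata, null, 2) },
  ];
}
```

After uploading each file to the bucket (for example with `PutObjectCommand`), the cron would call `StartIngestionJobCommand` with the knowledge base's `knowledgeBaseId` and `dataSourceId` to start the sync.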

For the Agentic approach laid out in this post, I have created a Bedrock Agent that uses the same knowledge base as the internal developer portal. When the CoordinatingAgent receives requests about the kinds of data found in the developer portal, it uses the developer-portal-agent to retrieve that data and return it to the client. Yes, I know the naming conventions are not consistent. Don't judge. Naming stuff remains one of the toughest things in software development.

The image of the Developer Portal Chatbot architecture represents how most people access this data: from the developer portal itself. Although the main chatbot can also reach this data, the most effective way is to go to the developer portal and use its built-in chatbot UI.

LLM Chat

The llm-chat-agent has more going on. It has a Bedrock knowledge base and an action group, which is a Lambda it can invoke. That Lambda provides chat history and session tracking. When the CoordinatingAgent receives a request from the chatbot that has nothing to do with the developer portal, the llm-chat-agent handles it by invoking a Lambda that invokes a Bedrock Agent.

The biggest issue we have seen is calls timing out. A Lambda behind an Amazon API Gateway endpoint is bound by API Gateway's integration timeout of roughly 30 seconds, even though the function itself can run for up to 15 minutes. If the time from when the agent-proxy Lambda is called until the Agent finishes processing the message with its knowledge bases and action groups exceeds that limit, the request fails.
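To make the chat-history action group more concrete, here is a minimal sketch of a Lambda an agent could call, assuming the action group is defined with function details rather than an OpenAPI schema. The event and response shapes follow the format Bedrock Agents use for function-details action groups; the `saveMessage` and `getHistory` function names, the `message` parameter, and the in-memory `Map` are hypothetical.

```typescript
// Subset of the event a Bedrock Agent sends to a function-details action group.
interface ActionGroupEvent {
  messageVersion: string;
  sessionId: string;
  actionGroup: string;
  function: string;
  parameters?: { name: string; type: string; value: string }[];
}

interface ActionGroupResponse {
  messageVersion: string;
  response: {
    actionGroup: string;
    function: string;
    functionResponse: { responseBody: { TEXT: { body: string } } };
  };
}

// Hypothetical in-memory store; a real deployment would persist history
// elsewhere (for example DynamoDB) because Lambda memory is not durable.
const histories = new Map<string, string[]>();

function param(event: ActionGroupEvent, name: string): string | undefined {
  return event.parameters?.find((p) => p.name === name)?.value;
}

export async function handler(event: ActionGroupEvent): Promise<ActionGroupResponse> {
  const history = histories.get(event.sessionId) ?? [];
  let body: string;

  switch (event.function) {
    case "saveMessage": { // hypothetical function name on the action group
      history.push(param(event, "message") ?? "");
      histories.set(event.sessionId, history);
      body = `Saved message ${history.length} for this session.`;
      break;
    }
    case "getHistory": // hypothetical function name on the action group
      body = history.length > 0 ? history.join("\n") : "No history for this session.";
      break;
    default:
      body = `Unknown function: ${event.function}`;
  }

  // The agent reads the TEXT body and uses it in its next reasoning step.
  return {
    messageVersion: "1.0",
    response: {
      actionGroup: event.actionGroup,
      function: event.function,
      functionResponse: { responseBody: { TEXT: { body } } },
    },
  };
}
```

Every call the agent makes to this action group happens inside the same InvokeAgent request, so it also counts toward the API Gateway timeout described above.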

Departing thoughts

Designing this system has required a change in process. I started writing this post a couple of weeks ago, and I've changed it several times because my approach keeps changing along with the requirements. I'll also be honest: there are disagreements about what Agentic AI actually means. When I have asked my AI chatbot what Agentic AI is, it doesn't say anything about a chatbot hooking up to a Bedrock Agent and asking it questions. When I've really gotten into discussions about Agentic AI, I've gotten the impression that it's meant for performing complex processes that are repeatable and happen regularly. The only reason I've started using Bedrock Agents is that I was told to.

I'd argue that using Agents to process requests and questions, as in the chatbot use case, is easy but unnecessary. It adds a layer of complexity that slows the process. These are the four approaches I've worked through when implementing chatbots.

1. Use the AWS SDK directly from the UI to interact with LLMs available in Bedrock.

2. Call an AWS Lambda from the UI, which uses the AWS SDK to interact with LLMs available in Bedrock.

3. Call an Amazon Bedrock Agent from the UI, which uses a combination of knowledge bases and action groups (the Agent has an LLM attached to it).

4. Call an AWS Lambda from the UI to interact with the Bedrock Agent.

For number 1, although it was the fastest to respond, I didn't like it because it exposed credentials for Amazon Bedrock on the front end, which means this isn't something we could build for external use.

For number 2, calling a Lambda that fronts Amazon Bedrock really wasn't noticeably slower than using the SDK directly on the front end. This was actually my preferred method for the simple chatbot use case. I didn't experience any issues with timing out, even using the large Claude Sonnet LLM.

For number 3, this was slower than the first two, and I began to have timeout issues on the front end because I set limits on how long to wait for a response from the Agent. I always add retry logic in certain cases, so most of the time I got answers back. I did have to raise my default timeout to 60 seconds to ensure I got a valid response. I never liked this, and it always felt too slow.

For number 4, this has been the most frustrating. As I stated earlier, the hard timeout on API endpoints backed by AWS Lambda has caused me all kinds of frustration. By and large, I get the responses back in time, but if the question requires more processing, like a complex code example, it is not unusual for it to fail. If I get lucky, I can still get the answer even after it fails on two tries in a row. After a third failed attempt, I stop retrying the request and return an error to the user. This leads me to believe that I will probably need to move to using WebSockets to make these requests. If that is successful, I'll create a post for that as well.
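The retry behavior described above can be sketched as follows: each attempt gets its own timeout (defaulting to the 60 seconds mentioned for number 3), and after the third failure the last error is rethrown so the UI can show it to the user. The `withTimeout` and `requestWithRetries` names are hypothetical, and this is a sketch rather than the exact logic in my chatbot.

```typescript
// Rejects if `work` has not settled within `ms` milliseconds.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });
  // Clear the timer either way so it does not keep the process alive.
  return Promise.race([work, timeout]).finally(() => clearTimeout(timer));
}

// Calls `request` up to `maxAttempts` times, starting a fresh request each time.
// After the last failure, the most recent error is rethrown to the caller.
async function requestWithRetries<T>(
  request: () => Promise<T>,
  maxAttempts = 3,
  timeoutMs = 60_000,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await withTimeout(request(), timeoutMs);
    } catch (err) {
      lastError = err;
    }
  }
  throw lastError;
}
```

One caveat of this design: `Promise.race` only stops waiting; it does not cancel the underlying request, so a slow Agent call keeps running on the server. Passing an `AbortSignal` to `fetch` would let the client abandon the HTTP request as well.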

Keep in mind, if you are actually reading this, that I am still very new to all of this and have not had any formal training. I am figuring this out as I go, trying to wrap my mind around how Gen AI works and what it means for me and my future. Creating this post is merely my way of documenting my journey as I figure out how AI can benefit my organization and company.